I was working a case a while back and I came across some malware that had time stomping capabilities. There have been numerous posts written on how to use the MFT as a means to determine if time stomping has occurred, so I won't go into too much detail here.

Time Stomping

Time stomping is an anti-forensics technique. Often, one of the questions that needs to be answered is when the malware arrived on a system. If the malware's timestamps have been changed, i.e., time stomped, it can be difficult both to identify a suspicious file and to answer the question, "When?"

Basically, there are two "sets" of timestamps tracked in the MFT: the $STANDARD_INFORMATION and $FILE_NAME attributes. Each tracks four timestamps: Modified, Accessed, Changed (MFT entry modified) and Born (created). Or, if you prefer, Created, Last Written, Accessed and Entry Modified (tomato, tomahto). The $STANDARD_INFORMATION timestamps are the ones normally displayed in Windows Explorer and by most forensic tools.

Most time stomping tools only change the $STANDARD_INFORMATION set. This means that by using tools that display both the $STANDARD_INFORMATION and $FILE_NAME attributes, you can compare the two sets to determine whether a file may have been time stomped. If the $STANDARD_INFORMATION timestamps predate the $FILE_NAME timestamps, that is a possible red flag (example to follow).

In my particular case, by reviewing the suspicious file's $STANDARD_INFORMATION and $FILE_NAME attributes, it was relatively easy to see that there was a mismatch and thus, combined with other indicators, that time stomping had occurred. Below is an example of what a typical time stomped malware file looked like. As you can see, the $STANDARD_INFORMATION modified (M) and born (B) dates predate the $FILE_NAME dates (test data was used for demonstrative purposes).

System A \test\malware.exe

$STANDARD_INFORMATION

M: Fri Jan 1 07:08:15 2010 Z

A: Tue Oct 7 06:19:23 2014 Z

C: Tue Oct 7 06:19:23 2014 Z

B: Fri Jan 1 07:08:15 2010 Z

$FILE_NAME

M: Thu Oct 2 05:41:56 2014 Z

A: Thu Oct 2 05:41:56 2014 Z

C: Thu Oct 2 05:41:56 2014 Z

B: Thu Oct 2 05:41:56 2014 Z
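The comparison described above is easy to script once the timestamps are out of the MFT. Below is a minimal Python sketch, assuming the timestamps have already been parsed into UTC `datetime` objects; the `MACB` class and the `si_predates_fn` helper are hypothetical names for illustration, not part of any tool mentioned in this post. Using the System A values above, it reports which $STANDARD_INFORMATION fields are earlier than their $FILE_NAME counterparts.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class MACB:
    """One set of NTFS timestamps: Modified, Accessed, Changed (entry modified), Born."""
    m: datetime
    a: datetime
    c: datetime
    b: datetime


def si_predates_fn(si: MACB, fn: MACB) -> list[str]:
    """Return the fields where $STANDARD_INFORMATION is earlier than $FILE_NAME.

    A non-empty result is a possible red flag, not proof of time stomping.
    """
    return [name for name in ("m", "a", "c", "b") if getattr(si, name) < getattr(fn, name)]


def utc(*parts: int) -> datetime:
    return datetime(*parts, tzinfo=timezone.utc)


# System A values from the example above.
si = MACB(m=utc(2010, 1, 1, 7, 8, 15), a=utc(2014, 10, 7, 6, 19, 23),
          c=utc(2014, 10, 7, 6, 19, 23), b=utc(2010, 1, 1, 7, 8, 15))
fn_time = utc(2014, 10, 2, 5, 41, 56)
fn = MACB(m=fn_time, a=fn_time, c=fn_time, b=fn_time)

print(si_predates_fn(si, fn))  # ['m', 'b']
```

Only the modified and born dates are flagged here; the accessed and changed dates were updated after the stomp, so they are later than the $FILE_NAME values.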

However, on a couple of systems there were a few outliers where the time stomped malware's $STANDARD_INFORMATION and $FILE_NAME modified and born dates matched:

System B \test\malware.exe

$STANDARD_INFORMATION

M: Fri Jan 1 07:08:15 2010 Z

A: Tue Oct 7 06:19:23 2014 Z

C: Tue Oct 7 06:19:23 2014 Z

B: Fri Jan 1 07:08:15 2010 Z

$FILE_NAME

M: Fri Jan 1 07:08:15 2010 Z

A: Thu Oct 2 05:41:56 2014 Z

C: Thu Oct 2 05:41:56 2014 Z

B: Fri Jan 1 07:08:15 2010 Z

Due to other indicators, it was pretty clear that these files were time stomped; however, I was curious what could have caused these dates to match when all the others did not. In effect, it appeared that the Modified and Born dates had been time stomped in both the $SI and $FN attributes, which was not the MO in any of the other instances.

Luckily, I remembered a blog post by Harlan Carvey in which he ran various file system operations and reported the MFT and USN change journal output for each test. I remembered that during one of his tests, some dates had been copied from the $STANDARD_INFORMATION attribute into the $FILE_NAME attribute. A quick review of his post revealed that this had occurred during a rename operation. Below is a quote from Harlan's post:

"I honestly have no idea why the last accessed (A) and creation (B) dates from the $STANDARD_INFORMATION attribute would be copied into the corresponding time stamps of the $FILE_NAME attribute for a rename operation"

In my particular case, it was not the accessed (A) and creation (B) dates that appeared to have been copied, but the modified (M) and creation (B) dates. Shoot... not the same results as Harlan's test, but his system was Windows 7 and the system I was examining was Windows XP. Because my system was different, I decided to follow Harlan's procedure and do some testing on Windows XP to see what happened when I renamed a file.

Testing

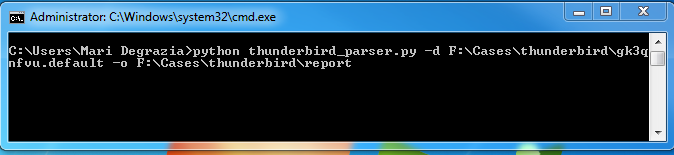

My test system was Windows XP Pro SP3 in a VirtualBox VM. After each test, I used FTK Imager to load the VMDK file and export the MFT record. I then parsed the MFT record with Harlan Carvey's mft.exe.
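To compare many records by script, a rough first step is grouping the parser's text output into its $STANDARD_INFORMATION and $FILE_NAME sets. This Python sketch assumes the output layout shown in the listings in this post: a path line, four M/A/C/B lines for $STANDARD_INFORMATION, then one "FN:" line per $FILE_NAME attribute followed by its own four lines. `parse_record` is a hypothetical helper; other parser versions may format their output differently.

```python
import re
from datetime import datetime, timezone

# Assumed layout (as in this post): $SI M/A/C/B lines first, then one
# "FN: <name> Parent Ref: ..." line per $FILE_NAME attribute, each followed by M/A/C/B lines.
TS_LINE = re.compile(r"^([MACB]):\s+(.+?)\s+Z$")
FN_LINE = re.compile(r"^FN:\s+(.+?)\s+Parent Ref:")


def parse_ts(text: str) -> datetime:
    """Parse e.g. 'Thu Oct 2 23:22:05 2014' as UTC (the trailing 'Z')."""
    return datetime.strptime(text, "%a %b %d %H:%M:%S %Y").replace(tzinfo=timezone.utc)


def parse_record(output: str) -> dict[str, dict[str, datetime]]:
    """Group timestamps by attribute: '$SI' or '$FN:<name>'."""
    sets: dict[str, dict[str, datetime]] = {}
    current = "$SI"  # timestamps before the first FN: line belong to $STANDARD_INFORMATION
    for raw in output.splitlines():
        line = raw.strip()
        fn_match = FN_LINE.match(line)
        if fn_match:
            current = "$FN:" + fn_match.group(1)
            continue
        ts_match = TS_LINE.match(line)
        if ts_match:
            sets.setdefault(current, {})[ts_match.group(1)] = parse_ts(ts_match.group(2))
    return sets


# Usage with an excerpt of the post-rename record from this post (blank lines omitted).
sample = r"""12591 FILE Seq: 1 Link: 2 0x38 6 Flags: 1
[FILE]
.\Documents and Settings\Mari\My Documents\Renamed Text Document.txt
M: Wed Jul 29 03:30:45 2010 Z
A: Wed Jul 29 03:30:45 2010 Z
C: Thu Oct 2 23:38:36 2014 Z
B: Wed Jul 29 03:30:45 2010 Z
FN: RENAME~1.TXT Parent Ref: 10469 Parent Seq: 1
M: Wed Jul 29 03:30:45 2010 Z
A: Wed Jul 29 03:30:45 2010 Z
C: Wed Jul 29 03:30:45 2010 Z
B: Wed Jul 29 03:30:45 2010 Z
"""
for attr, stamps in parse_record(sample).items():
    print(attr, {k: v.isoformat() for k, v in stamps.items()})
```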

First, I created "New Text Document.txt" under My Documents. As expected, all the timestamps in both the $STANDARD_INFORMATION and $FILE_NAME were the same:

12591 FILE Seq: 1 Link: 2 0x38 4 Flags: 1

[FILE]

.\Documents and Settings\Mari\My Documents\New Text Document.txt

M: Thu Oct 2 23:22:05 2014 Z

A: Thu Oct 2 23:22:05 2014 Z

C: Thu Oct 2 23:22:05 2014 Z

B: Thu Oct 2 23:22:05 2014 Z

FN: NEWTEX~1.TXT Parent Ref: 10469 Parent Seq: 1

M: Thu Oct 2 23:22:05 2014 Z

A: Thu Oct 2 23:22:05 2014 Z

C: Thu Oct 2 23:22:05 2014 Z

B: Thu Oct 2 23:22:05 2014 Z

FN: New Text Document.txt Parent Ref: 10469 Parent Seq: 1

M: Thu Oct 2 23:22:05 2014 Z

A: Thu Oct 2 23:22:05 2014 Z

C: Thu Oct 2 23:22:05 2014 Z

B: Thu Oct 2 23:22:05 2014 Z

[RESIDENT]

Next, I used the program SetMACE to change the $STANDARD_INFORMATION timestamps to "2010:07:29:03:30:45:789:1234". As expected, the $STANDARD_INFORMATION timestamps changed, while the $FILE_NAME timestamps stayed the same. Once again, this is the pattern commonly seen in files that have been time stomped:
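NTFS stores each of these timestamps as a 64-bit FILETIME value: the number of 100-nanosecond intervals since January 1, 1601 UTC. The listings in this post only show whole seconds. The Python sketch below converts the SetMACE string to a FILETIME value. It rests on an assumption, not confirmed here: that the string's fields are year:month:day:hour:minute:second:milliseconds:remaining 100 ns ticks. Check the tool's own documentation before relying on it. `setmace_string_to_filetime` is a hypothetical helper.

```python
from datetime import datetime

# NTFS stores each timestamp as a FILETIME: 100-nanosecond ticks since 1601-01-01 UTC.
FILETIME_EPOCH = datetime(1601, 1, 1)


def to_filetime(dt: datetime, extra_ticks: int = 0) -> int:
    """Convert a naive UTC datetime, plus optional extra 100 ns ticks, to a FILETIME value."""
    delta = dt - FILETIME_EPOCH
    whole_seconds = delta.days * 86_400 + delta.seconds
    return whole_seconds * 10_000_000 + delta.microseconds * 10 + extra_ticks


def setmace_string_to_filetime(value: str) -> int:
    """ASSUMPTION: 'YYYY:MM:DD:hh:mm:ss:<milliseconds>:<remaining 100 ns ticks>'."""
    year, month, day, hour, minute, second, millis, ticks = (int(p) for p in value.split(":"))
    if not 0 <= ticks < 10_000:  # one millisecond is 10,000 ticks of 100 ns
        raise ValueError("tick field out of range for this assumed format")
    return to_filetime(datetime(year, month, day, hour, minute, second, millis * 1000), ticks)


ft = setmace_string_to_filetime("2010:07:29:03:30:45:789:1234")
print(ft % 10_000_000)  # 7891234: the sub-second part, not visible in seconds-only listings
```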

12591 FILE Seq: 1 Link: 2 0x38 4 Flags: 1

[FILE]

.\Documents and Settings\Mari\My Documents\New Text Document.txt

M: Wed Jul 29 03:30:45 2010 Z

A: Wed Jul 29 03:30:45 2010 Z

C: Wed Jul 29 03:30:45 2010 Z

B: Wed Jul 29 03:30:45 2010 Z

FN: NEWTEX~1.TXT Parent Ref: 10469 Parent Seq: 1

M: Thu Oct 2 23:22:05 2014 Z

A: Thu Oct 2 23:22:05 2014 Z

C: Thu Oct 2 23:22:05 2014 Z

B: Thu Oct 2 23:22:05 2014 Z

FN: New Text Document.txt Parent Ref: 10469 Parent Seq: 1

M: Thu Oct 2 23:22:05 2014 Z

A: Thu Oct 2 23:22:05 2014 Z

C: Thu Oct 2 23:22:05 2014 Z

B: Thu Oct 2 23:22:05 2014 Z

Next, I used the rename command at the command prompt to rename the file from "New Text Document.txt" to "Renamed Text Document.txt" (I know, creative naming). The interesting thing here is that, unlike in the Windows 7 test where two dates were copied over, all four dates were copied from the original file's $STANDARD_INFORMATION into both $FILE_NAME attributes, while the $STANDARD_INFORMATION C (entry modified) timestamp was updated to the time of the rename:

12591 FILE Seq: 1 Link: 2 0x38 6 Flags: 1

[FILE]

.\Documents and Settings\Mari\My Documents\Renamed Text Document.txt

M: Wed Jul 29 03:30:45 2010 Z

A: Wed Jul 29 03:30:45 2010 Z

C: Thu Oct 2 23:38:36 2014 Z

B: Wed Jul 29 03:30:45 2010 Z

FN: RENAME~1.TXT Parent Ref: 10469 Parent Seq: 1

M: Wed Jul 29 03:30:45 2010 Z

A: Wed Jul 29 03:30:45 2010 Z

C: Wed Jul 29 03:30:45 2010 Z

B: Wed Jul 29 03:30:45 2010 Z

FN: Renamed Text Document.txt Parent Ref: 10469 Parent Seq: 1

M: Wed Jul 29 03:30:45 2010 Z

A: Wed Jul 29 03:30:45 2010 Z

C: Wed Jul 29 03:30:45 2010 Z

B: Wed Jul 29 03:30:45 2010 Z

Based upon my testing, a rename could have caused the 2010 dates to be the same in both the $SI and $FN attributes in my outliers. This scenario "in the wild" makes sense: the malware is dropped on the system, time stomped, and then renamed to a less conspicuous file name. On a Windows XP system, this sequence of events may make it difficult to identify time stomping using MFT analysis alone.
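To make the effect concrete, here is a toy Python model of the sequence above. It is not how NTFS is implemented. `rename_as_observed_on_xp` is a hypothetical function that encodes only what this single Windows XP SP3 test showed: all four $SI values were copied into $FN, and the $SI C time was updated to the rename time. After the rename, a simple "is $SI earlier than $FN?" check no longer flags anything.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class ToyRecord:
    """Toy MFT record: one $SI set and one $FN set, each keyed by M/A/C/B."""
    si: dict[str, datetime]
    fn: dict[str, datetime]


def utc(*parts: int) -> datetime:
    return datetime(*parts, tzinfo=timezone.utc)


def create(now: datetime) -> ToyRecord:
    return ToyRecord(si=dict.fromkeys("MACB", now), fn=dict.fromkeys("MACB", now))


def timestomp_si(rec: ToyRecord, fake: datetime) -> None:
    # Like the SetMACE step: only $STANDARD_INFORMATION changes.
    rec.si = dict.fromkeys("MACB", fake)


def rename_as_observed_on_xp(rec: ToyRecord, now: datetime) -> None:
    # Encodes the XP SP3 observation only: $SI values copied into $FN, then $SI C updated.
    rec.fn = dict(rec.si)
    rec.si["C"] = now


def si_predates_fn(rec: ToyRecord) -> list[str]:
    return [k for k in "MACB" if rec.si[k] < rec.fn[k]]


rec = create(utc(2014, 10, 2, 23, 22, 5))
timestomp_si(rec, utc(2010, 7, 29, 3, 30, 45))
print(si_predates_fn(rec))  # ['M', 'A', 'C', 'B']: red flag
rename_as_observed_on_xp(rec, utc(2014, 10, 2, 23, 38, 36))
print(si_predates_fn(rec))  # []: the comparison no longer flags the file
```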

So what if you run across a file where you suspect this may be the case? On Windows XP, you could check the restore points' change.log files, which track changes such as file creations and renames. Once again, Mr. HC has a Perl script, lscl.pl, that parses these change.log files. If you see a file creation and a rename, you can use the restore point as a guide to when the file was created and renamed on the system.

You could also parse the USN change journal to see if and when the suspected file had been created and renamed. Tools such as Triforce or Harlan's usnj.pl do a great job.
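In the USN change journal, creation and rename entries are identified by flags in each record's Reason field. The sketch below parses version 2 records (`USN_RECORD_V2`, as defined in the Windows SDK) from a buffer holding an extracted $UsnJrnl:$J stream. It is only a minimal illustration, not a replacement for Triforce or usnj.pl. It skips non-version-2 records and zero-filled gaps, and the record in the usage section is synthetic, built in memory. `iter_usn_v2` is a hypothetical helper name.

```python
import struct
from datetime import datetime, timedelta, timezone

# Fixed-size part of USN_RECORD_V2 (little-endian, 60 bytes): RecordLength, MajorVersion,
# MinorVersion, FileReferenceNumber, ParentFileReferenceNumber, Usn, TimeStamp, Reason,
# SourceInfo, SecurityId, FileAttributes, FileNameLength, FileNameOffset.
V2_HEADER = struct.Struct("<IHHQQqqIIIIHH")

# A subset of the USN_REASON_* flags.
REASONS = {
    0x00000001: "DATA_OVERWRITE",
    0x00000002: "DATA_EXTEND",
    0x00000100: "FILE_CREATE",
    0x00001000: "RENAME_OLD_NAME",
    0x00002000: "RENAME_NEW_NAME",
    0x00008000: "BASIC_INFO_CHANGE",
    0x80000000: "CLOSE",
}
EPOCH_1601 = datetime(1601, 1, 1, tzinfo=timezone.utc)


def filetime_to_datetime(ft: int) -> datetime:
    return EPOCH_1601 + timedelta(microseconds=ft // 10)


def iter_usn_v2(buf: bytes):
    """Yield dicts for version 2 records in an extracted $J stream."""
    offset = 0
    while offset + V2_HEADER.size <= len(buf):
        (length, major, _minor, file_ref, _parent_ref, usn, stamp, reason,
         _source, _security, _attrs, name_len, name_off) = V2_HEADER.unpack_from(buf, offset)
        if length == 0:  # zero-filled gap: step to the next 8-byte boundary
            offset += 8
            continue
        if major == 2:
            raw_name = buf[offset + name_off:offset + name_off + name_len]
            yield {
                "usn": usn,
                "time": filetime_to_datetime(stamp),
                "mft_entry": file_ref & 0xFFFF_FFFF_FFFF,  # low 48 bits; high 16 = sequence
                "name": raw_name.decode("utf-16-le"),
                "reasons": [label for bit, label in REASONS.items() if reason & bit],
            }
        offset += length


# Synthetic record built in memory for illustration (not real case data).
name = "Renamed Text Document.txt".encode("utf-16-le")
rec_len = (V2_HEADER.size + len(name) + 7) // 8 * 8  # records are 8-byte aligned
when = datetime(2014, 10, 2, 23, 38, 36, tzinfo=timezone.utc)
stamp = (when - EPOCH_1601) // timedelta(microseconds=1) * 10
header = V2_HEADER.pack(rec_len, 2, 0, (1 << 48) | 12591, (1 << 48) | 10469, 4096, stamp,
                        0x00002000 | 0x80000000, 0, 0, 0x20, len(name), V2_HEADER.size)
journal = b"\0" * 16 + (header + name).ljust(rec_len, b"\0")
for entry in iter_usn_v2(journal):
    print(entry["time"].isoformat(), entry["mft_entry"], entry["name"], entry["reasons"])
```

Stepping through zero-filled regions 8 bytes at a time is slow on a large extracted $J, but it keeps the sketch simple.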

If the change.log files and the USN change journal do not go back far enough, checking the compile date of the suspicious file with a program like CFF Explorer may also help shed some light. If a program has a compile date years after its born date... red flag.
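The compile date that tools like CFF Explorer display comes from the TimeDateStamp field of the PE file's COFF header, stored as seconds since January 1, 1970 UTC. Below is a minimal Python sketch that reads it and compares it with a born date. `pe_compile_time` and `compile_born_gap` are hypothetical helper names, and the input in the usage section is a synthetic header built in memory rather than a real sample. The linker timestamp can itself be altered, so treat it as one more indicator rather than proof.

```python
import struct
from datetime import datetime, timedelta, timezone


def pe_compile_time(data: bytes) -> datetime:
    """Return the COFF header TimeDateStamp of a PE image as a UTC datetime."""
    if data[:2] != b"MZ":
        raise ValueError("missing MZ header")
    (pe_offset,) = struct.unpack_from("<I", data, 0x3C)  # e_lfanew
    if data[pe_offset:pe_offset + 4] != b"PE\0\0":
        raise ValueError("missing PE signature")
    # The COFF header follows the signature: Machine (2), NumberOfSections (2), TimeDateStamp (4).
    (stamp,) = struct.unpack_from("<I", data, pe_offset + 8)
    return datetime.fromtimestamp(stamp, tz=timezone.utc)


def compile_born_gap(data: bytes, born: datetime) -> timedelta:
    """Positive result: compiled after the claimed born date; a gap of years is the red flag."""
    return pe_compile_time(data) - born


# Synthetic, minimal header built in memory for illustration (not a real sample).
image = bytearray(0x80)
image[:2] = b"MZ"
struct.pack_into("<I", image, 0x3C, 0x40)
image[0x40:0x44] = b"PE\0\0"
compiled = datetime(2014, 9, 30, tzinfo=timezone.utc)
struct.pack_into("<I", image, 0x48, int(compiled.timestamp()))
born = datetime(2010, 1, 1, 7, 8, 15, tzinfo=timezone.utc)  # System A $SI born date
gap = compile_born_gap(bytes(image), born)
print(gap.days > 365)  # True: compiled years after the claimed born date
```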

I don't think anything I've found is groundbreaking or new. In fact, the Forensics Wiki entry on timestomp demonstrates this behavior with time stomping and moved files, but I thought I would share anyway.

Happy hunting, and may the odds be ever in your favor...
